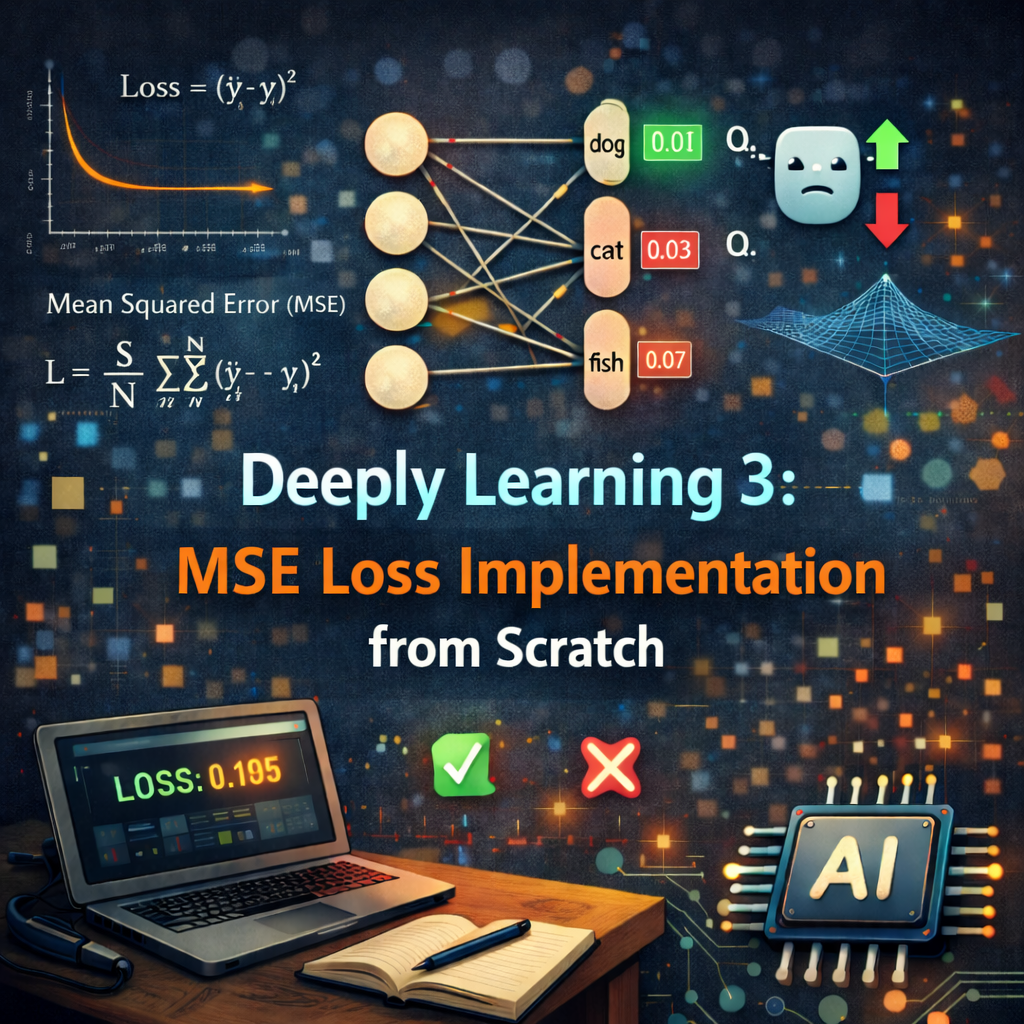

Deeply Learning 3: Mean Squared Error Loss Implementation from Scratch

Deeply learning one concept at a time. In this post, we will implement mean squared error loss from scratch.

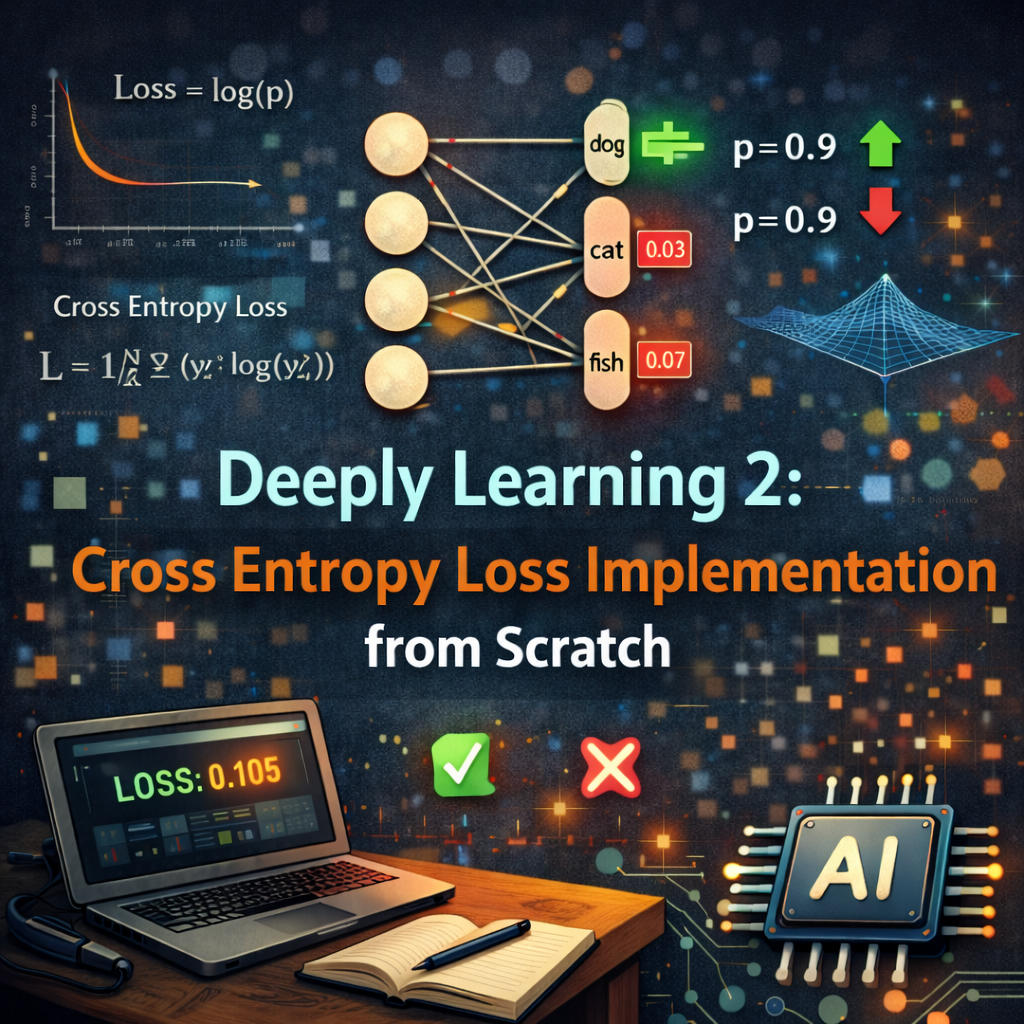

Deeply learning one concept at a time. In this post, we implement cross-entropy loss from scratch.

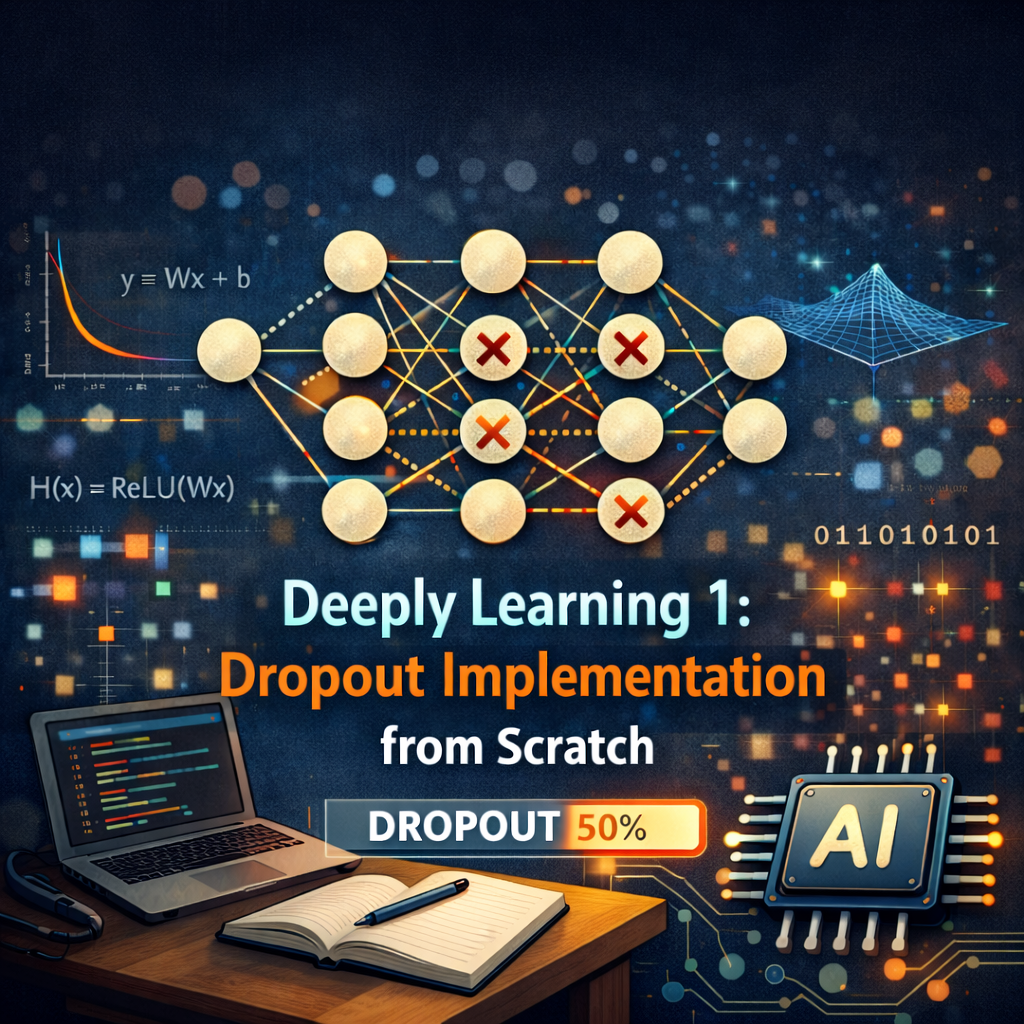

Deeply learning one concept at a time. In this post, we implement simple dropout from scratch.

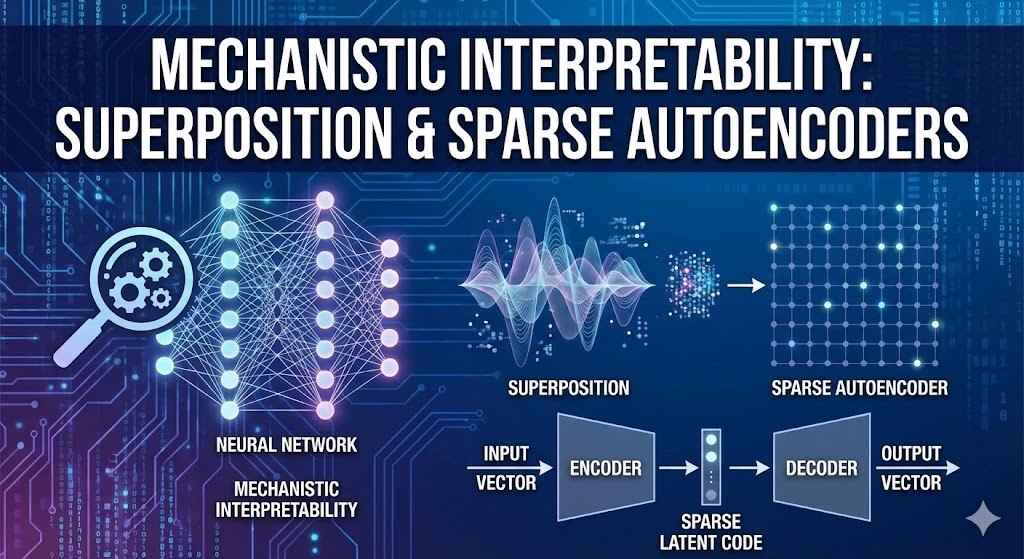

In this post, we will explore the concepts of Superposition and Sparse Autoencoders in the context of mechanistic interpretability. We'll build a spar...

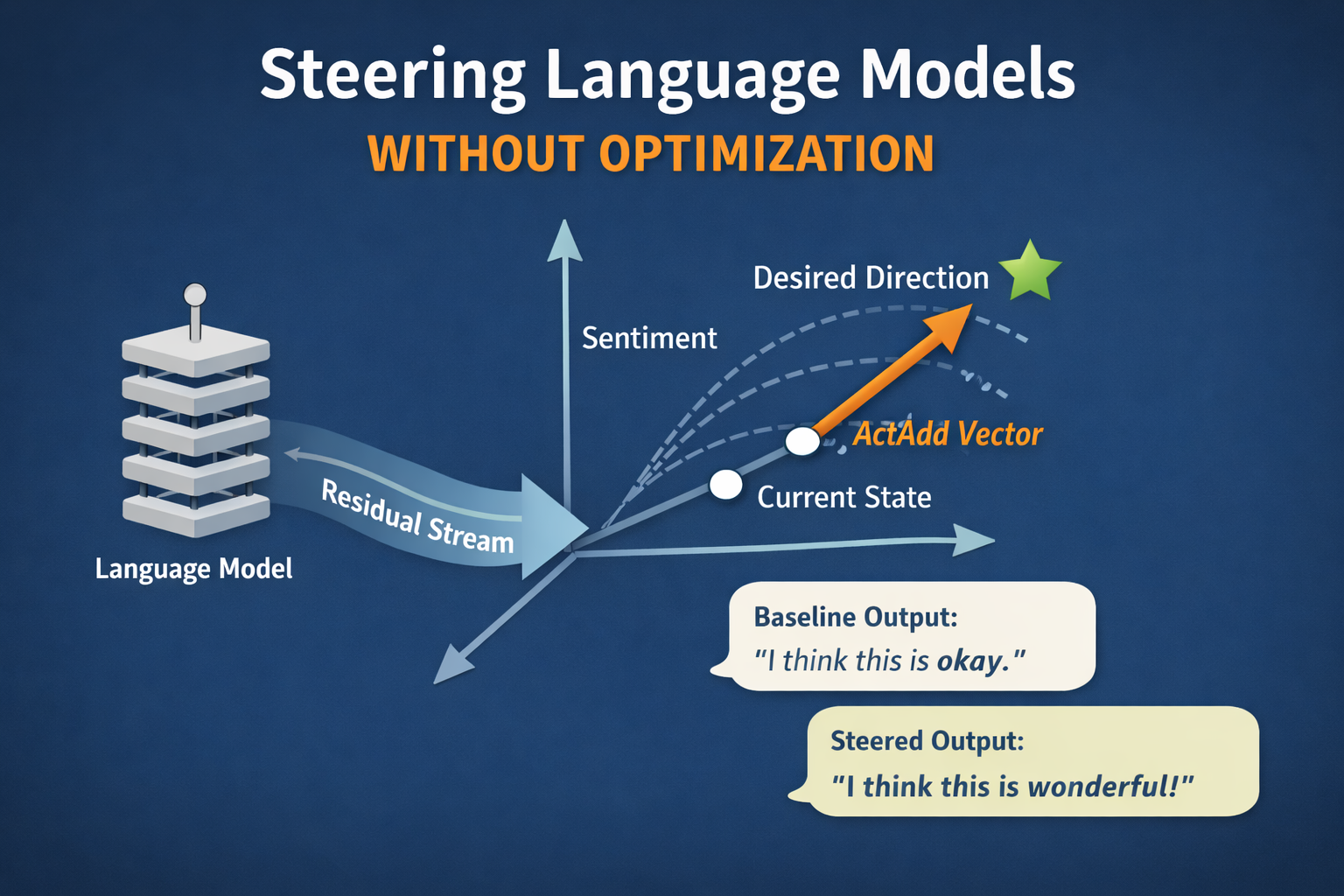

Implement Activation Addition (ActAdd) and Contrastive Activation Addition (CAA) to steer language models at inference time without training. Learn how adding vectors to the residual stream changes behavior, with practical code implementations and analysis of both methods.

In this post, we're going to build GPT-2 from the ground up, implementing every component ourselves and understanding exactly how this remarkable architecture works.

Learn how attention mechanisms work in transformers by visualizing what LLMs see when processing text. Discover how attention connects semantically related tokens (like Paris → French), understand the Query-Key-Value framework, and explore how different attention heads specialize in syntax, semantics, and coreference.

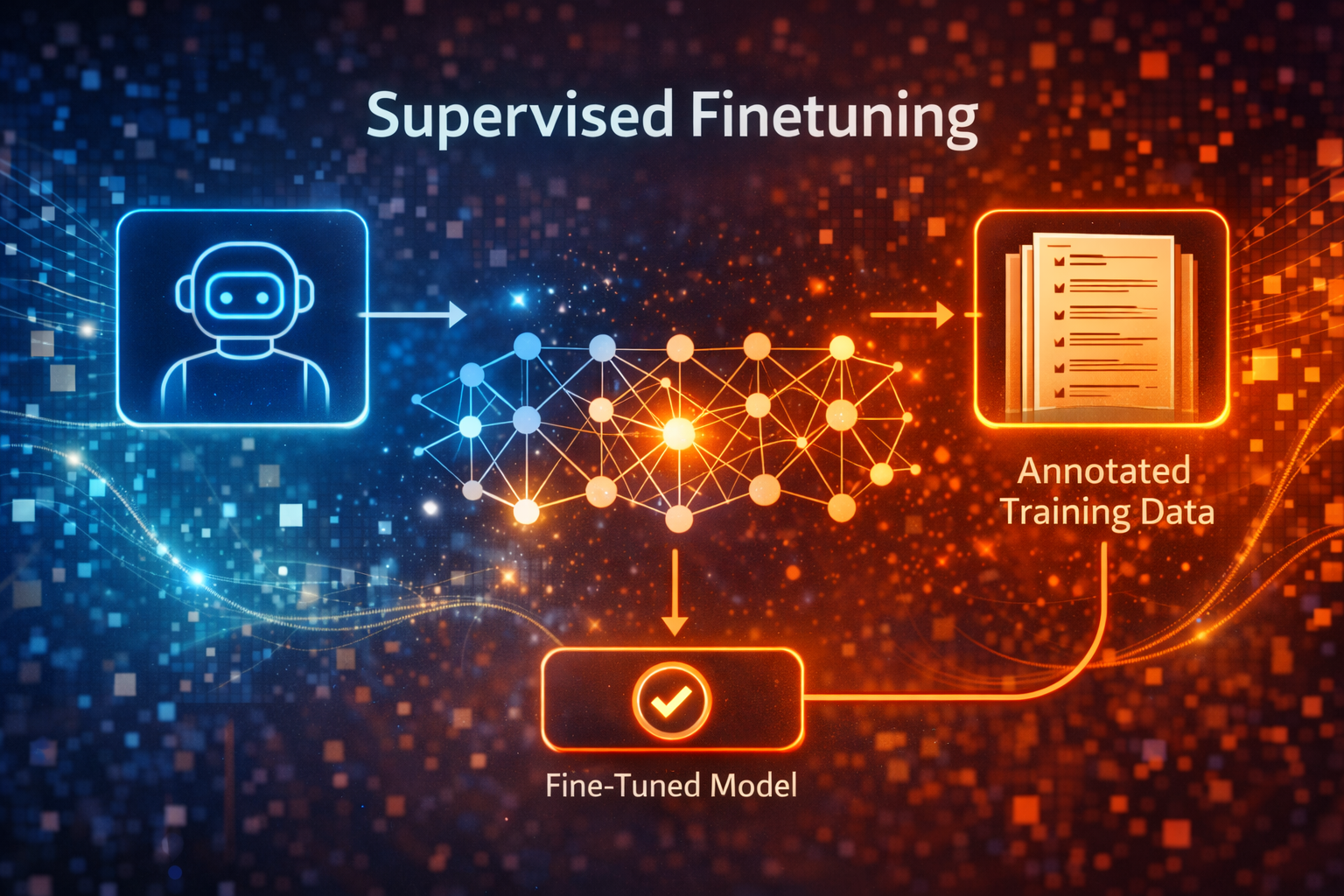

Learn how supervised fine-tuning (SFT) fits into the LLM training pipeline. This post explains the three-step process (pretraining → SFT → alignment), demonstrates SFT implementation with a practical example, and shows how fine-tuning transforms a base model into a task-specific assistant.

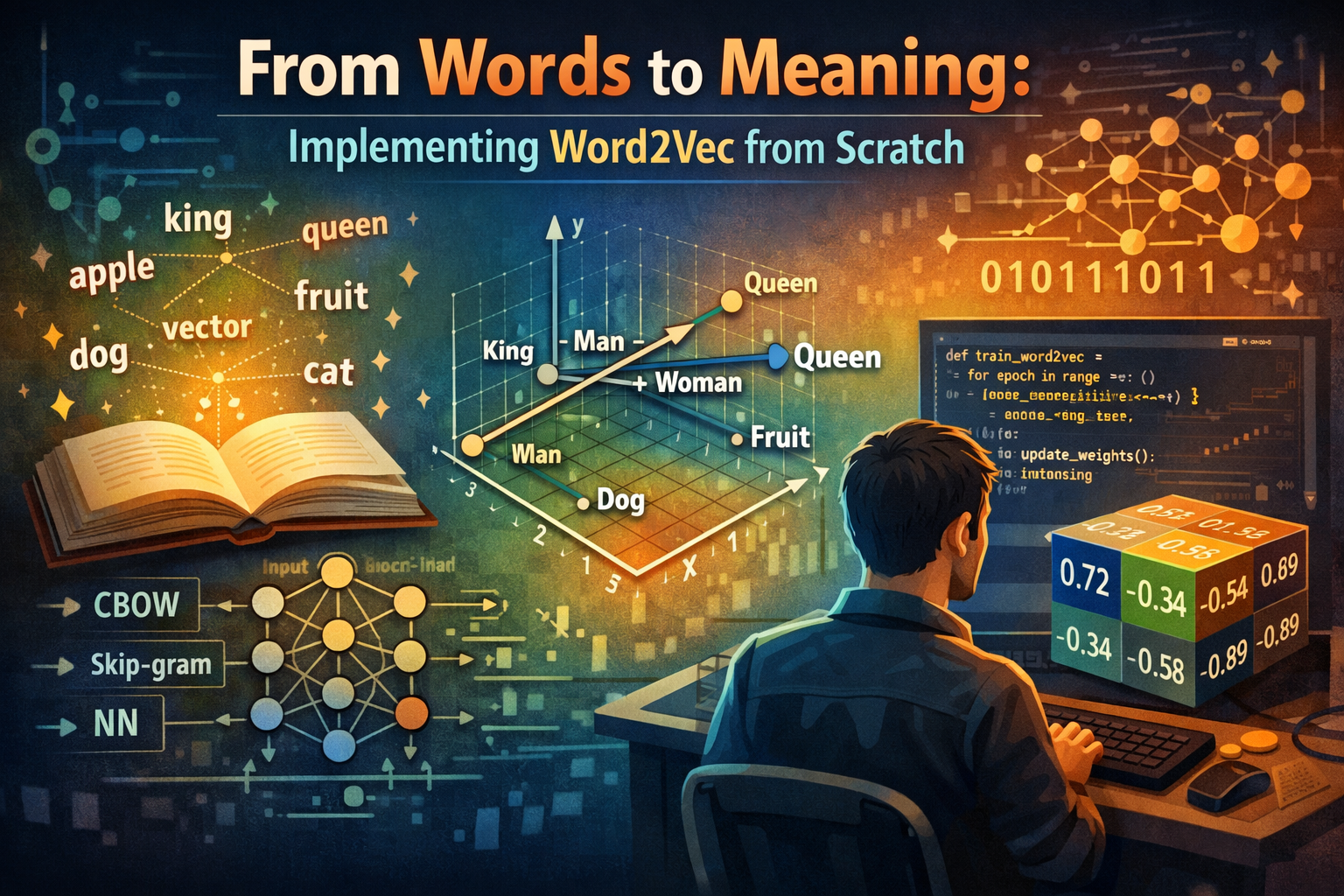

Word embeddings are one of the most transformative developments in Natural Language Processing (NLP). They solve a fundamental problem: how can we rep...

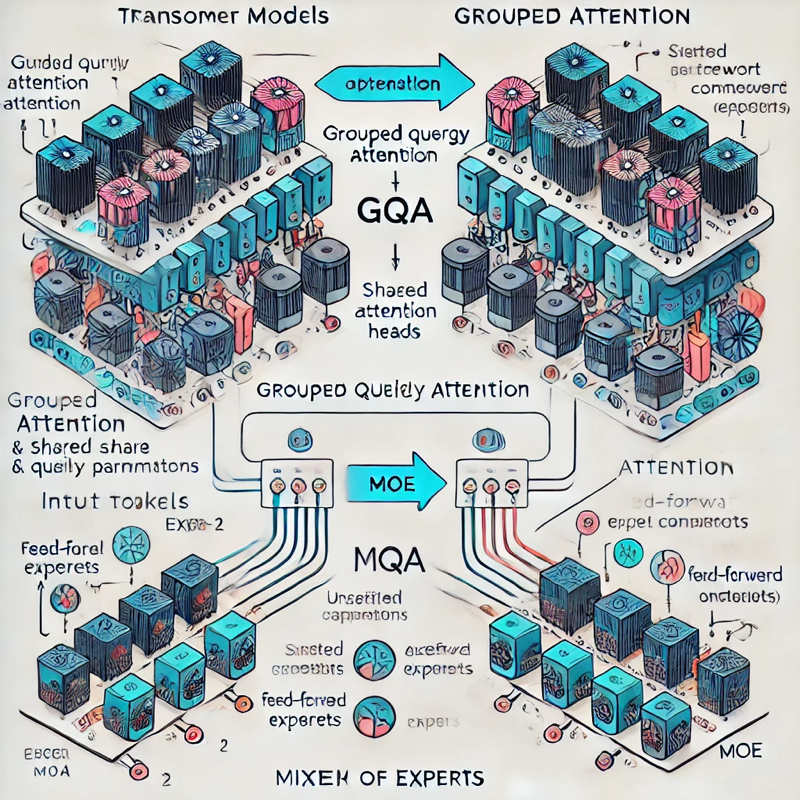

Exploring model architecture optimizations for Large Language Model (LLM) inference, focusing on Grouped-Query Attention (GQA) and Mixture of Experts (MoE) techniques.